AMELIA is a balanced, GPU-accelerated infrastructure hosted at IAC Naples, designed to support scientific computing, AI/ML workloads and FAIR-aligned data services within the H2IOSC federation.

AMELIA was first presented at the CINI Summer School in Naples in June 2025 (see attached poster).

The infrastructure was also presented at DH25 Digital Heritage – International Congress 2025 (see attached poster).

Quick overview

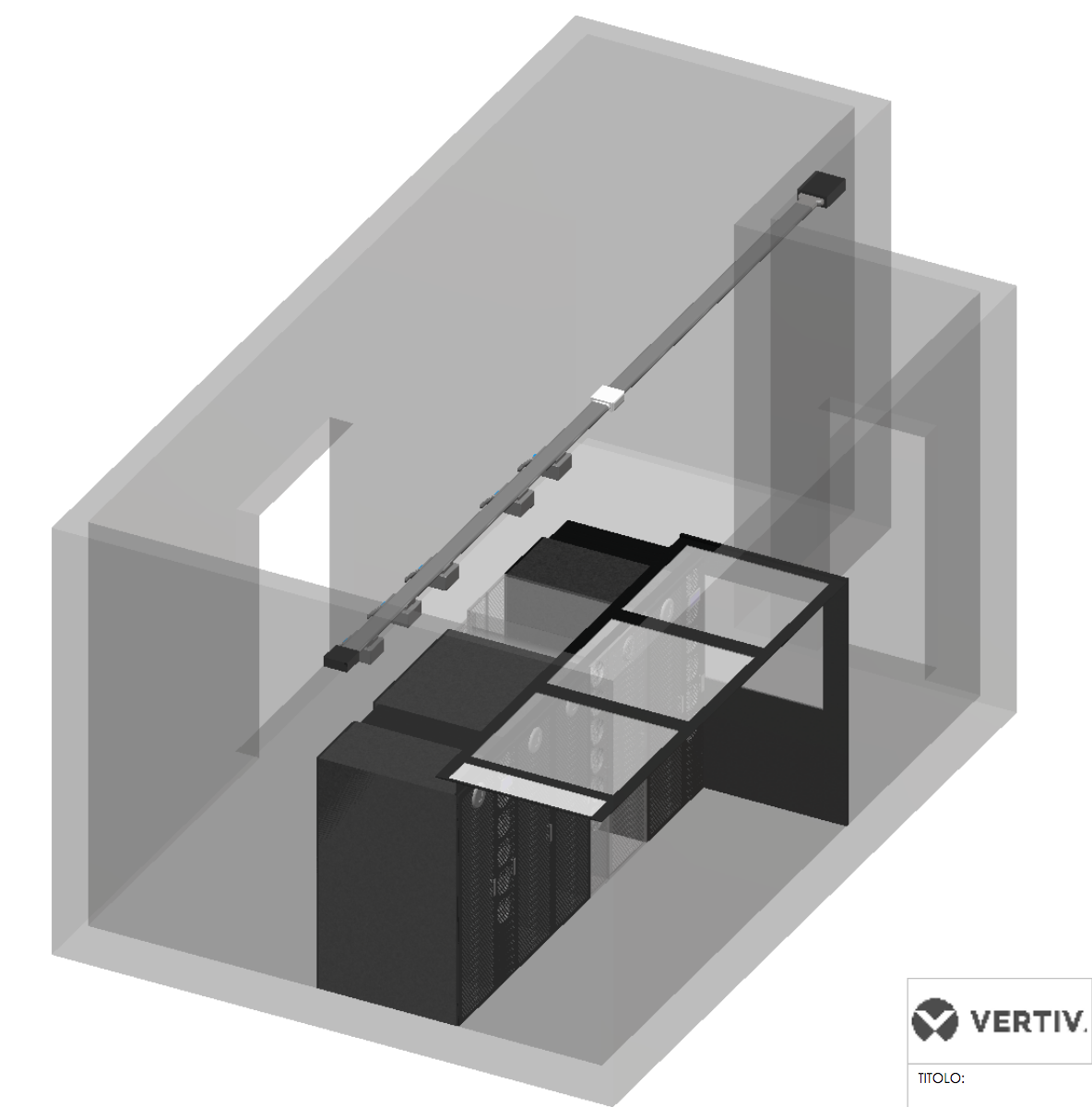

- 25 DELL PowerEdge R750 compute nodes (2× Intel Xeon Gold / node)

- 4 GPU-accelerated nodes with NVIDIA A30 (24 GB)

- InfiniBand HDR 200 Gb/s fabric + Ethernet control network

- 650 TB PowerScale SAN + local scratch on each node

Key metrics

- ~380 TFLOPS CPU peak

- ~1 PFLOPS GPU peak (mixed precision)

- ~31 kW average power consumption

- PUE < 1.5 (targeted datacenter efficiency)
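For reference, PUE (Power Usage Effectiveness) is conventionally defined as total facility energy divided by the energy delivered to IT equipment. A target below 1.5 therefore means that cooling, power distribution and other overhead add less than half of the IT load on top of it:

```latex
\mathrm{PUE} = \frac{E_{\text{facility}}}{E_{\text{IT}}}, \qquad
\mathrm{PUE} < 1.5 \;\Longleftrightarrow\; E_{\text{facility}} - E_{\text{IT}} < 0.5\, E_{\text{IT}}
```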

Access & Use

AMELIA offers an interactive multi-user environment managed by the SLURM scheduler. Containerized workflows (Docker / Singularity) are supported for reproducibility and portability. The system is well suited to training medium-scale LLMs, numerical simulations and hybrid CPU/GPU workloads.
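As an illustration of interactive use, the sketch below builds an `srun` command that opens a shell on a GPU node. `--partition`, `--gres`, `--cpus-per-task`, `--time` and `--pty` are standard `srun` options; the partition name `gpu` and the resource defaults are assumptions, not AMELIA's actual configuration (check `sinfo` on the cluster for real partition names and limits).

```python
import shlex


def interactive_gpu_cmd(partition: str = "gpu", gpus: int = 1, cpus: int = 8,
                        time_limit: str = "01:00:00") -> list[str]:
    """Build an srun command for an interactive shell on a GPU node.

    The default partition name "gpu" and resource values are hypothetical.
    """
    return [
        "srun",
        f"--partition={partition}",
        f"--gres=gpu:{gpus}",          # request GPUs as generic resources
        f"--cpus-per-task={cpus}",
        f"--time={time_limit}",        # wall-clock limit, HH:MM:SS
        "--pty", "bash", "-i",         # attach a pseudo-terminal for an interactive shell
    ]


if __name__ == "__main__":
    cmd = interactive_gpu_cmd()
    print(shlex.join(cmd))
    # From a login node, the command could be launched with subprocess.run(cmd, check=True)
```

Returning the command as a list (rather than a shell string) lets it be passed straight to `subprocess.run` without shell quoting issues.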

Hardware & storage

- Compute: 25 × DELL PowerEdge R750, 2× Intel Xeon Gold 6883 (32 cores/socket), 1 TB RAM each.

- GPU acceleration: 4 × NVIDIA A30 (24 GB) GPU nodes, PCIe Gen4.

- Storage: 650 TB PowerScale SAN mounted as /ifs/hpc; 512 GB system disk per node; 7 TB local scratch per node.

- OS & SW: Rocky Linux 8.9, SLURM 23.11.6; OpenStack components for virtualization; Zabbix monitoring.
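A common pattern with this storage layout is to keep datasets on the shared SAN (`/ifs/hpc`) and copy the working set to node-local scratch (`/scratch/local`) before an I/O-heavy job. The sketch below shows that step under stated assumptions: the per-project and per-user subdirectories in the comment are hypothetical, and whether jobs should write directly under `/scratch/local` depends on local policy.

```python
import shutil
import tempfile
from pathlib import Path


def stage_to_scratch(src: Path, scratch_dir: Path, margin: float = 1.1) -> Path:
    """Copy one input file from shared storage into node-local scratch.

    Refuses to copy unless the scratch filesystem has at least `margin`
    times the file size free, then returns the path of the local copy.
    """
    size = src.stat().st_size
    free = shutil.disk_usage(scratch_dir).free
    if free < size * margin:
        raise OSError(f"insufficient scratch space: need {size * margin:.0f} B, have {free} B")
    dest = scratch_dir / src.name
    shutil.copy2(src, dest)  # copy2 preserves modification times as well as contents
    return dest


if __name__ == "__main__":
    # On AMELIA this might look like (hypothetical layout):
    #   stage_to_scratch(Path("/ifs/hpc/myproject/input.h5"), Path("/scratch/local/myuser"))
    # Here temporary directories stand in for the SAN and the local disk.
    with tempfile.TemporaryDirectory() as shared, tempfile.TemporaryDirectory() as scratch:
        src = Path(shared) / "input.dat"
        src.write_bytes(b"\0" * 4096)
        local = stage_to_scratch(src, Path(scratch))
        print(local.stat().st_size)  # 4096
```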

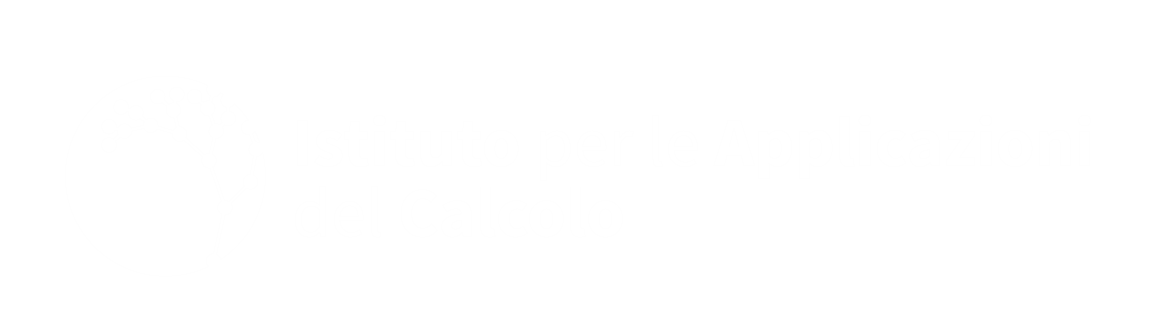

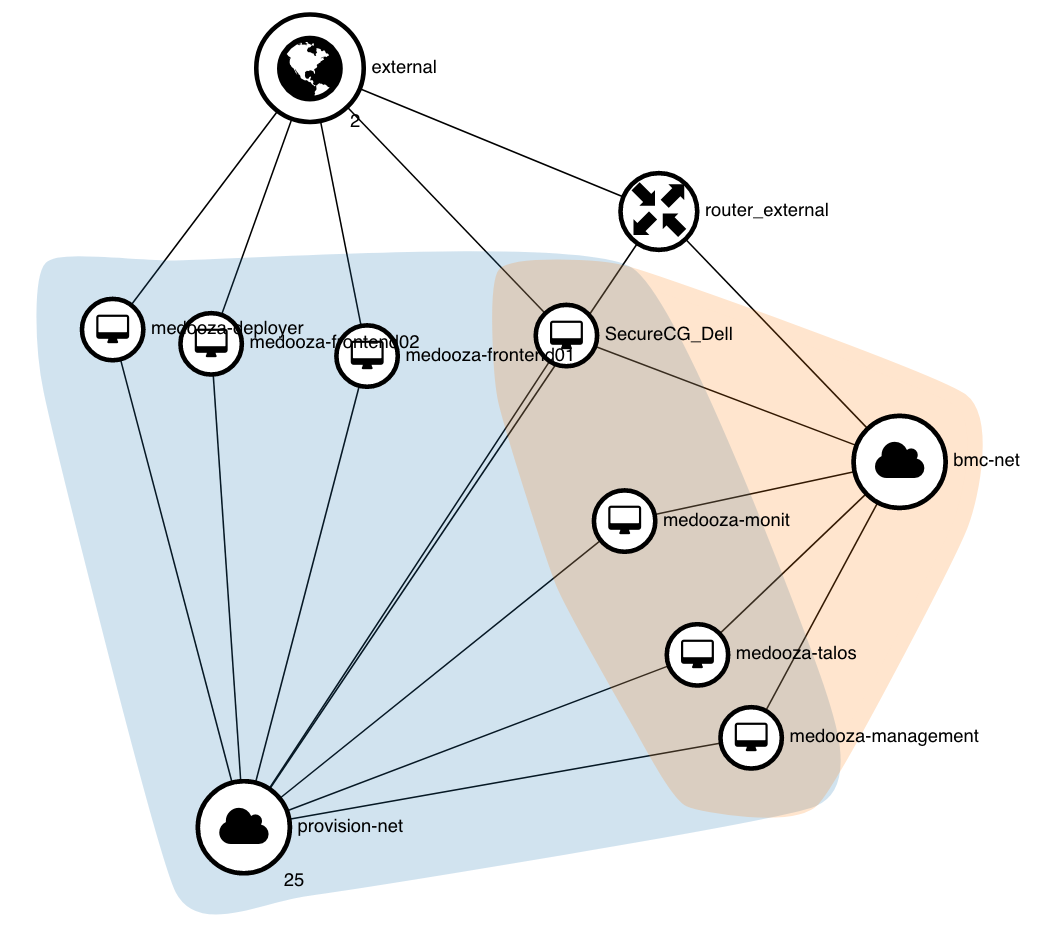

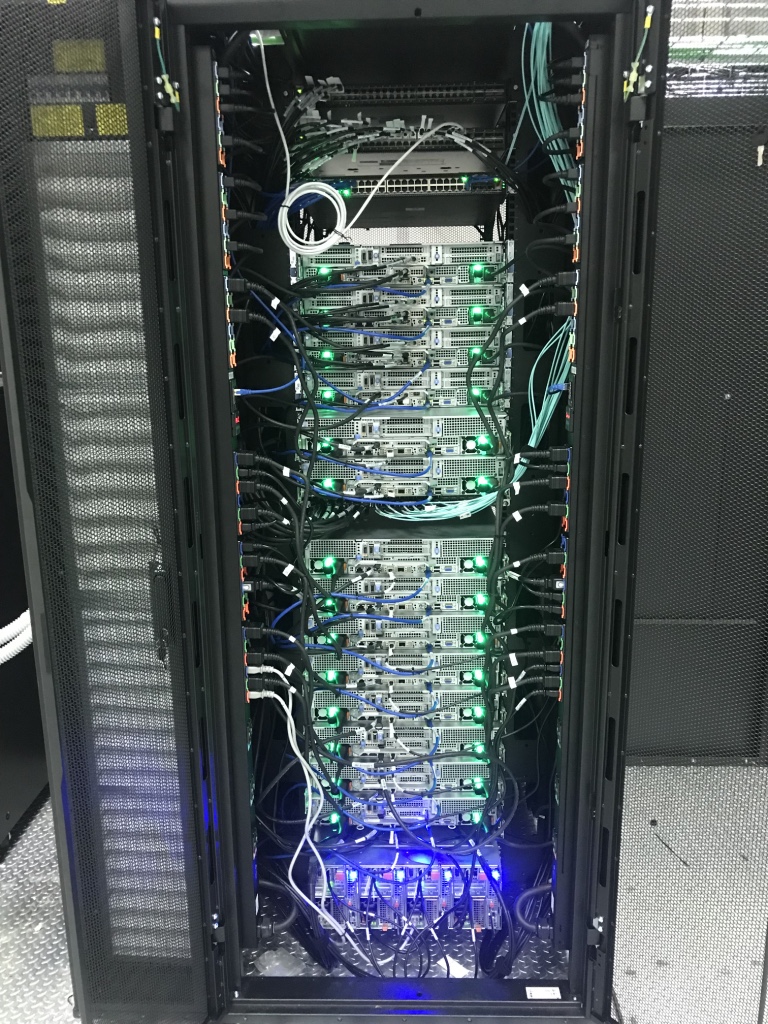

Logical & physical architecture

The design is tiered, separating the control, compute and data planes. Racks are densely deployed with island containment to improve cooling efficiency.

- Control layer: management, scheduler (SLURM), monitoring (Zabbix), OpenStack

- Compute layer: 25 × CPU nodes (DELL R750)

- GPU layer: 4 × NVIDIA A30 nodes

- Data layer: 650 TB PowerScale SAN mounted as /ifs/hpc + local scratch (/scratch/local)

- Interconnect: InfiniBand HDR 200 Gb/s

Software & workflows

Containerized workflows (Docker, Singularity) are supported to ensure reproducibility. SLURM is used for job scheduling and supports array jobs, GPU partitioning and QoS levels. OpenStack components provide virtualization where needed.
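The sketch below generates an `sbatch` script that combines the features just mentioned: an array job, a GPU request and a QoS level, with each task running inside a Singularity container. The `#SBATCH` options, the `%x`/`%A`/`%a` output-name patterns, `$SLURM_ARRAY_TASK_ID` and `singularity exec --nv` (which exposes the host NVIDIA GPUs in the container) are standard; the partition `gpu`, the QoS `normal`, the job name and the `--index` argument of the user script are hypothetical placeholders.

```python
from textwrap import dedent


def array_job_script(image: str, script: str, n_tasks: int,
                     partition: str = "gpu", qos: str = "normal",
                     gpus_per_task: int = 1) -> str:
    """Return an sbatch script that runs one containerized task per array index.

    Partition and QoS defaults are hypothetical; use the names configured on AMELIA.
    """
    return dedent(f"""\
        #!/bin/bash
        #SBATCH --job-name=amelia-array
        #SBATCH --partition={partition}
        #SBATCH --qos={qos}
        #SBATCH --gres=gpu:{gpus_per_task}
        #SBATCH --array=0-{n_tasks - 1}
        #SBATCH --output=%x_%A_%a.out

        # --nv makes the host NVIDIA driver and GPUs visible inside the container
        singularity exec --nv {image} python {script} --index "$SLURM_ARRAY_TASK_ID"
        """)


if __name__ == "__main__":
    print(array_job_script("train.sif", "train.py", n_tasks=10))
```

Writing the output to a file (for example `job.sh`) and submitting it with `sbatch job.sh` would queue ten tasks, each writing its log to a file named after the job name, the array job ID and the task index.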

Energy & sustainability

Average power consumption is ~31 kW (~273,000 kWh/year). PUE is consistently kept below 1.5 through containment and optimized cooling. The estimated annual CO₂ footprint is ~109 tonnes, based on the IEA global-average grid emission factor.
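The energy and CO₂ figures follow from simple arithmetic. The sketch below reproduces them; the emission factor of 0.4 kg CO₂/kWh is an assumption back-calculated from the figures above (109 t / 273,000 kWh ≈ 0.40), not a value stated on this page.

```python
HOURS_PER_YEAR = 8760  # 365 days x 24 h


def annual_energy_kwh(avg_power_kw: float, hours: float = HOURS_PER_YEAR) -> float:
    """Energy drawn over a year at a constant average power, in kWh."""
    return avg_power_kw * hours


def co2_tonnes(energy_kwh: float, kg_co2_per_kwh: float) -> float:
    """Convert energy to CO2 mass (tonnes) using a grid emission factor."""
    return energy_kwh * kg_co2_per_kwh / 1000.0


if __name__ == "__main__":
    energy = annual_energy_kwh(31.0)
    print(f"{energy:,.0f} kWh/year")                # 271,560 kWh/year
    print(f"{co2_tonnes(energy, 0.4):.1f} t CO2")   # 108.6 t CO2
```

A constant 31 kW gives about 271,600 kWh/year, roughly consistent with the ~273,000 kWh/year quoted; the small gap presumably reflects rounding of the average power. Either value yields ~109 t at 0.4 kg/kWh.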

Visuals from the installation

The figures on this page are samples from the original installation documents (rack layout, topology, monitoring dashboards).

Contact

CNR IAC Napoli, AMELIA HPC. For access and inquiries:

Gabriella Bretti, H2IOSC project manager

Massimiliano Pedone, AMELIA technical manager

© CNR IAC